Benchmark the AI performance of Android devices

September 21, 2020

Machine learning is powering exciting new features in mobile apps. Many smartphones now have dedicated hardware to accelerate the computationally intensive operations required for on-device inference.

Today, we’re launching the UL Procyon AI Inference Benchmark, a new test for measuring the performance and accuracy of dedicated AI-processing hardware in Android devices.

The benchmark uses a range of popular, state-of-the-art neural network models such as MobileNet V3, Inception V4, SSDLite V3 and DeepLab V3. The models run on the device to perform common machine-vision tasks. The benchmark measures performance for both float- and integer-optimized models.
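Integer-optimized models usually store weights and activations as 8-bit integers rather than 32-bit floats. The sketch below shows 8-bit affine (scale and zero-point) quantization, the representation NNAPI uses for its `TENSOR_QUANT8_ASYMM` tensors (real value = scale × (q − zero point)). It is background only: this announcement does not say exactly how the benchmark's integer models were quantized, and the scale and zero-point values here are illustrative assumptions.

```python
# Sketch of 8-bit affine (asymmetric) quantization:
#   real = scale * (q - zero_point), with q stored as an unsigned 8-bit value.
# The scale and zero_point used in the demo are hypothetical.

QMIN, QMAX = 0, 255  # unsigned 8-bit range


def quantize(values, scale, zero_point):
    """Map floats to 8-bit integers: q = round(x / scale) + zero_point, clamped."""
    return [max(QMIN, min(QMAX, round(x / scale) + zero_point)) for x in values]


def dequantize(qvalues, scale, zero_point):
    """Recover approximate floats: x ~= scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in qvalues]


if __name__ == "__main__":
    # Hypothetical parameters: q in [0, 255] covers reals in [-1.28, 1.27].
    scale, zero_point = 0.01, 128
    original = [-1.0, 0.0, 0.25, 1.0]
    q = quantize(original, scale, zero_point)
    restored = dequantize(q, scale, zero_point)
    for x, qi, r in zip(original, q, restored):
        print(f"{x:+.3f} -> {qi:3d} -> {r:+.3f}")
```

Values inside the representable range come back within half a quantization step (scale / 2) of the original, while values outside it are clamped. This is why integer models trade a little accuracy for speed, and why the benchmark checks output quality as well as inference time.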

The benchmark runs on the device's dedicated AI-processing hardware, with the Android Neural Networks API (NNAPI) selecting the most appropriate processor for each test. The benchmark also runs each test separately on the GPU and/or CPU for comparison.

UL Procyon benchmarks are designed for professional users. The AI Inference Benchmark is for hardware and software engineers who need independent, standardized tools to test the quality of their NNAPI implementations and the performance of dedicated AI hardware in Android devices.

- Benchmark and compare the AI inference performance of Android devices

- Tests based on common machine-vision tasks using state-of-the-art neural networks

- The benchmark measures both inference performance and output quality

- Compare NNAPI, CPU and GPU performance

- Verify NNAPI implementation quality and compatibility

- Use benchmark results to optimize drivers for hardware accelerators

- Compare float- and integer-optimized model performance

- Simple to set up and use on a device or via Android Debug Bridge (ADB)

Results and insights

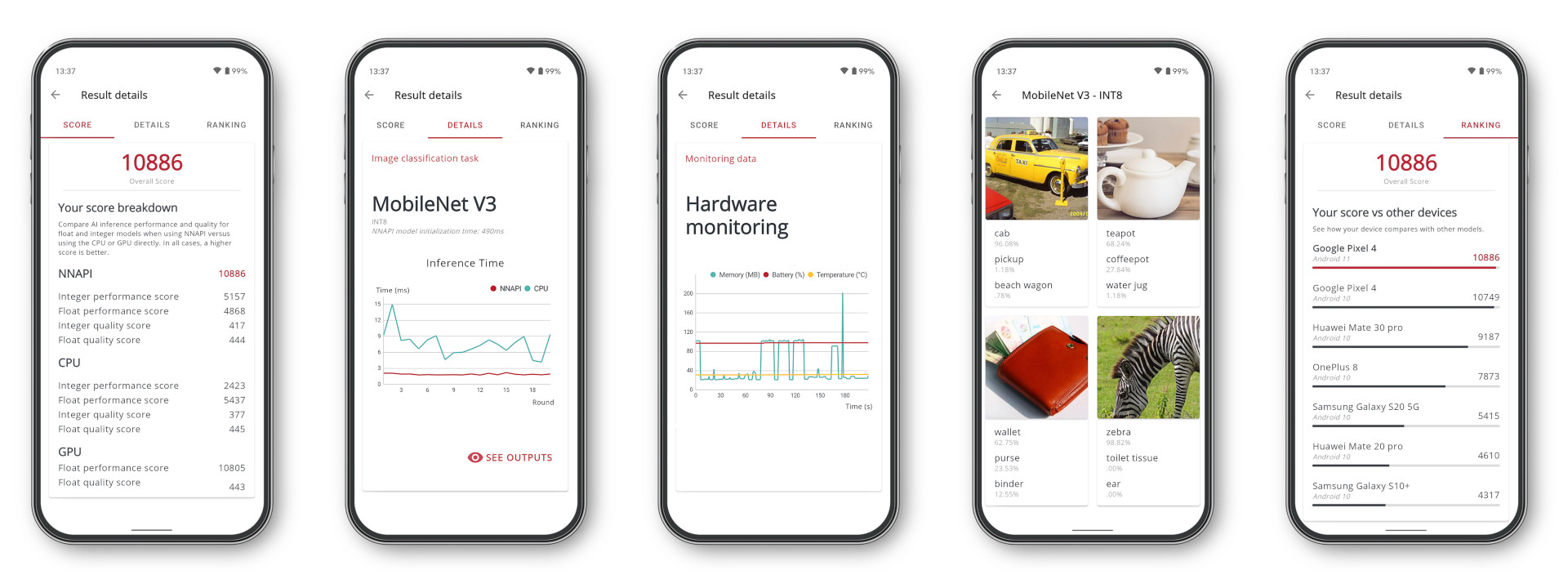

The UL Procyon AI Inference Benchmark provides a range of useful results, metrics and insights. Use the main benchmark score to compare the AI performance of Android devices. The score breakdown helps you compare the performance and quality of different processors with integer and float models. The in-app ranking list shows you how a device compares with other smartphones.

A chart for each test shows the inference time with NNAPI and other processors, making it easy to see the difference in performance. A hardware monitoring chart shows you how the device’s temperature, battery charge level and memory use changed during the benchmark run. You can also check the outputs from each model to ensure the hardware is returning the correct results.

The UL Procyon AI Inference Benchmark is easy to install and run with no complicated configuration required. You can start the benchmark on the device or via ADB. You can view benchmark scores, charts and rankings in the app and export detailed result files for further analysis.
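When comparing per-test inference times, whether read from the in-app charts or from an exported result file, it can help to express them as a speedup over one reference processor. The function below is a hypothetical helper, not part of the benchmark: the processor labels and the dictionary layout are assumptions, since this announcement does not describe the export format.

```python
def speedup_vs_baseline(times_ms, baseline="CPU"):
    """Return how many times faster each processor ran a test than the baseline.

    times_ms maps a processor label (hypothetical, e.g. "NNAPI", "GPU", "CPU")
    to its inference time in milliseconds for a single test.
    """
    if baseline not in times_ms:
        raise KeyError(f"no timing for baseline {baseline!r}")
    if any(t <= 0 for t in times_ms.values()):
        raise ValueError("inference times must be positive")
    base = times_ms[baseline]
    # A value above 1.0 means the processor was faster than the baseline.
    return {proc: base / t for proc, t in times_ms.items()}
```

Passing `baseline="NNAPI"` instead reports every processor relative to the NNAPI path, which can be a more natural reference when tuning drivers for a hardware accelerator.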

Available now

The UL Procyon AI Inference Benchmark is available now. Site licenses for professional users start at $1,495 (USD) per year.

Look out for more UL Procyon benchmarks coming soon

Procyon is a new benchmark suite from UL that’s designed specifically for professional users in industry, enterprise, government, retail and the press. Each UL Procyon benchmark is developed for a specific use case and uses real applications where possible. UL works closely with its industry partners to ensure that every Procyon benchmark is accurate, relevant and impartial. Flexible licensing means you can pick and choose the individual benchmarks that best meet your needs. Find out more at https://benchmarks.ul.com/procyon.
